The following document paraphrases and quotes material originally contained in the NASA Systems Engineering Handbook NASA-SP-2007-6105-Rev-1 Section 4.0 System Design (page 31)

System engineering design is a highly iterative and recursive process, resulting in a validated set of requirements and a validated design solution that satisfies a set of customer expectations.

- Customer[1] Expectations (Program/Project Objectives and Mission Profile) and Constraints (Stakeholders, Institutional, Professional, etc.).

- High Level Functional Requirements[2] (Level 1 Program/Project)

- Functional and Logical decompositions (Project WBS)

- Trade Studies and Iterative Design Loop

- Form Creative Design Solution (System PBS)

- Define Level 2 System and Subsystem Performance Requirements

- Make Hardware and/or Software Model(s) and Perform Experiments

- Organize and Analyze Data

- Does Functional & Performance Analysis show design will meet Functional Design and concept of operations (ConOps) Requirements?

- If additional detail is needed, repeat the process

- Select a preferred design

- Does the system work[3] (functional and performance)?

- Is the system achievable within cost and schedule and other constraints?

- If the answer is no, reenter design loop (Step 4), reorganize project (Step 3), or adjust Customer’s Expectations and Constraints (Step 1) and start again.

- Preparing presentations (PDR and CDR)

- Communicate Results (PDR and CDR)

- Reports, plans, and specifications. (Project Planning)

- Implement, Verify, and Validate the design. (Project Implementation)

The Iterative Nature of the System Design Process

After Mission Authorization (i.e., funding), the process starts with a study team collecting and clarifying the Customer’s Expectations, including the program objectives, constraints, design drivers, mission profile[2], and criteria for defining mission success.

From the customer’s expectations, high-level requirements are defined. These high-level requirements drive an iterative design loop in which (1) creative “strawman” architectures/designs, (2) the concept of operations (ConOps), and (3) derived system and subsystem requirements are developed.

This process will require iterations and design decisions to achieve consistency (design loop blocks 1 – 3). Once consistency is achieved, analyses allow the project team to validate the design against the customer’s expectations. A simplified validation asks the questions:

- Does the system work (functional and performance)?

- Is the system achievable within cost and schedule constraints? [3]

The output of this step will typically result in modification of the customer’s expectations, and the process starts again. This process continues until the system (architecture, mission profile, and requirements) and the stakeholder expectations achieve consistency.

The number of iterations must be sufficient to allow analytical verification of the design to the requirements.

The design process is hierarchical and recursive by nature with the same process applied to the next level down in the program – one person’s subsystem is another person’s project.
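The loop described above can be sketched in a few lines of Python. This is only an illustration, not something taken from the NASA handbook: the `Design` class, the single performance and cost figures, and the step sizes are all made-up assumptions chosen so the two validation questions can be evaluated mechanically.

```python
from dataclasses import dataclass


@dataclass
class Design:
    """Hypothetical strawman design reduced to two made-up figures."""
    performance: float
    cost: float


def works(design: Design, required_performance: float) -> bool:
    """Validation question 1: does the system work?"""
    return design.performance >= required_performance


def achievable(design: Design, budget: float) -> bool:
    """Validation question 2: is the system achievable within cost?"""
    return design.cost <= budget


def design_loop(required_performance: float, budget: float, max_iterations: int = 10):
    """Iterate until design, requirements, and expectations are consistent."""
    design = Design(performance=1.0, cost=1.0)  # initial strawman (assumed values)
    for iteration in range(1, max_iterations + 1):
        if not works(design, required_performance):
            # Re-enter the design loop: a more capable design that costs more.
            design = Design(design.performance + 1.0, design.cost + 2.0)
        elif not achievable(design, budget):
            # Adjust the customer's expectations and simplify the design.
            required_performance -= 1.0
            design = Design(design.performance - 1.0, design.cost - 2.0)
        else:
            return design, required_performance, iteration
    raise RuntimeError("no consistent design found within the iteration limit")


final_design, final_requirement, iterations = design_loop(4.0, 5.0)
```

In this toy run the team first improves the design until it works, finds that it no longer fits the budget, and then negotiates a reduced expectation with the customer before the loop closes.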

System Design Keys

- Successful understanding and translation of the customer’s expectations into clear, unambiguous, quantitative, verifiable, and realizable program requirements

- Complete and thorough requirements traceability (including requirement flow-down)

- Document all decisions made during the development of the original design concept[4].

- Visualization of the product in use (ConOps) looking for missed level 1 requirements. Rapid Prototyping also helps us discover missing level 1 requirements (ex. ShopVac).

- Design validation is a continuing recursive and iterative process occurring over the entire life cycle of the product

| Note: It is extremely important to involve the customer in all phases of a project. Such involvement should be built in as a self-correcting feedback loop that will significantly enhance the chances of mission success. Involving the customer in a project builds confidence in the end product and serves as validation and acceptance by the target audience. |
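Requirements traceability and flow-down, listed among the keys above, can be checked mechanically once each requirement records its parent. The sketch below is a minimal illustration under assumptions: the `Requirement` fields, the identifiers (`L1-1`, `L2-1`, ...), and the requirement texts are hypothetical and not drawn from any real project.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Requirement:
    req_id: str
    level: int
    text: str
    parent_id: Optional[str] = None  # None for a Level 1 requirement


def traceability_gaps(requirements):
    """Return (orphans, unallocated).

    orphans:      lower-level requirements whose parent does not exist.
    unallocated:  Level 1 requirements with nothing flowing down from them.
    """
    by_id = {r.req_id: r for r in requirements}
    orphans = sorted(
        r.req_id for r in requirements
        if r.level > 1 and r.parent_id not in by_id
    )
    parents_in_use = {r.parent_id for r in requirements if r.parent_id is not None}
    unallocated = sorted(
        r.req_id for r in requirements
        if r.level == 1 and r.req_id not in parents_in_use
    )
    return orphans, unallocated


# Hypothetical requirement set for illustration only.
requirements = [
    Requirement("L1-1", 1, "The rover shall traverse the course."),
    Requirement("L1-2", 1, "The rover shall be operated remotely."),
    Requirement("L2-1", 2, "The drive subsystem shall climb the ramp.", "L1-1"),
    Requirement("L2-2", 2, "The battery shall last the mission.", "L1-9"),
]
orphans, unallocated = traceability_gaps(requirements)
```

Here `L2-2` points at a parent that does not exist, and `L1-2` has no derived requirements yet, which is the kind of gap a ConOps review is meant to surface.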

ConOps Examples

Remember, validation occurs over the life of the product (from concept to retirement).

Design faults discovered before the operational phase of the project.

- On the prosthetic arm project, ConOps showed that we had failed to conceptualize the soldier in McDonalds, specifically with respect to the appearance of the arm and the potential noise generated by the system. In both cases, the unwanted attention of fellow customers would have been placed on the soldier.

- On the solar panel project, ConOps showed that we had failed to conceptualize operation of the rover remotely, in the desert or ultimately on Mars. Once this was discovered, telemetry requirements were added to the project.

Design faults discovered during the operational phase of the project.

- After successful verification of the Pick and Place machine we tried to put it in a cabinet. Clearly, we did not conceptualize this step in the life-cycle of the product. All future projects now include this customer requirement.

- … it emerged that the design of Type 45 destroyers have faulty engines unable to operate continuously in warm waters. “The UK’s enduring presence in the Gulf should have made it a key requirement for the engines. The fact that it was not was an inexcusable failing and one which must not be repeated,” the MPs’ report said. Source: BBC “Royal Navy ‘woefully low’ on warships”

Customer Expectations (Project Objectives and Mission Profile)

Sponsor presents Program and/or Mission Objectives

- These are not requirements, but rather a statement of a problem to be solved. They may be qualitative in nature.

High Level Requirements (Level 1 Program/Project)

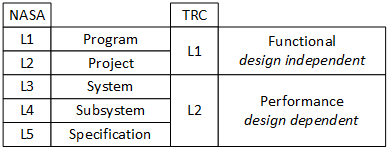

Reference: NASA Systems Engineering Handbook Section 4.2 Technical Requirements Definition Start (page 40) and Appendix C page A: 279

- Translate Program and/or Mission Objectives into Level 1 Program/Project Requirements.

- Work with the sponsor and get the sponsor’s concurrence that these meet their Program and/or Mission Objectives.

- It is important to document all decisions made during these early phases of the project. Include links to source material and how decisions were made.

- Were the equations used to calculate a requirement provided and are the answers correct?

- Close attention to this process is the difference between the customer saying “you said” and your company paying to correct the problem within the agreed upon schedule, versus you telling the customer your new requirement is out-of-scope and your customer paying the increased cost with attendant schedule delay[1].

Form Creative Solutions

The word “creative” appears fewer than half a dozen times in the 360-page NASA Systems Engineering Handbook. I will therefore rely on outside sources.

Logical decompositions (Level 2 Systems)

The following document paraphrases and quotes material originally contained in the NASA Systems Engineering Handbook NASA-SP-2007-6105-Rev-1 Section 4.3 Logical Decomposition (page 49)

“Logical Decomposition is the process for creating the detailed functional requirements that enable NASA programs and projects to meet the stakeholder expectations. This process identifies the “what” that must be achieved by the system at each level to enable a successful project. Logical decomposition utilizes functional analysis to create a system architecture and to decompose top-level (or parent) requirements and allocate them down to the lowest desired levels of the project.”
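As a rough illustration of the decomposition described in the quote, a functional hierarchy can be held as a tree and walked down to its lowest levels. The function names below are hypothetical examples for a small rover, and the `Function` class is an assumption made for this sketch, not a structure defined by the handbook.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Function:
    """One node in a functional decomposition: a 'what', not a 'how'."""
    name: str
    children: List["Function"] = field(default_factory=list)


def lowest_level_functions(node: Function) -> List[str]:
    """Recursively collect the functions that are not decomposed further."""
    if not node.children:
        return [node.name]
    result: List[str] = []
    for child in node.children:
        result.extend(lowest_level_functions(child))
    return result


# Hypothetical top-level function decomposed two levels down.
mission = Function("Explore terrain", [
    Function("Move", [Function("Drive wheels"), Function("Steer")]),
    Function("Communicate", [Function("Send telemetry"), Function("Receive commands")]),
])
allocatable = lowest_level_functions(mission)
```

The leaves of the tree are the functions that would be allocated to subsystems; the same walk can be applied again inside any subsystem, which reflects the recursive nature of the process.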

Design solutions – Experiments and Modeling (Level 2 Subsystems)

TBD

[1] For more on this subject see Week 3 “Requirements” document on page 10.

Program Design Verification and Validation

Source: NASA Systems Engineering Handbook NASA-SP-2007-6105-Rev-1, Section 5.3 Product Verification

While the approaches taken to verify and validate a design may be similar, the reasons behind the tests are different. Verification tests confirm that the Project Objectives for the design (ex. the robot), as defined by its level 1 and level 2 design requirements, are met. Validation tests confirm that the design can accomplish its mission. For our robots this is defined by the Mission Profile.

| Here is a simple way to understand and remember the difference between verification and validation. Verification – was the product built right? Validation – was the right product built? |

Like the design process, verification and validation are hierarchical by nature with the same process applied to the next level down in the program – one person’s subsystem is another person’s project.

Requirements Verification Matrix

Source: NASA Systems Engineering Handbook NASA-SP-2007-6105-Rev-1, Appendix D: Requirements Verification Matrix

When developing requirements, it is important to identify an approach for verifying the requirements. The requirement verification matrix defines how all design requirements are verified. The matrix should identify each requirement by a unique identifier and be definitive as to the source. The example shown provides the minimum information that should be included in the verification matrix.

Table D‑1 Simplified Requirements Verification Matrix

| Requirement No. | Paragraph | Shall Statement | Verification Success Criteria | Verification Method | Results | PASS / FAIL |
| --- | --- | --- | --- | --- | --- | --- |
| P-1 | 3.2.1.1 Capability: Support Uplinked Data (LDR) | System X shall provide a max. ground-to-station uplink of… | 1. System X locks to forward link at the min and max data rate tolerances 2. System X locks to the forward link at the min and max operating frequency tolerances | Test | | |
| P-i | Other paragraphs | Other “shalls” in PTRS | Other criteria | xxx | | |
| S-i or other unique designator | Other paragraphs | Other “shalls” in specs, ICDs, etc. | Other criteria | xxx | | |

- Unique identifier for each System X requirement. The identifier should indicate the document the System X requirement is contained within. If more than one document is used, include a key (P = PDR, C = CDR).

- Paragraph number of the System X requirement.

- Summary Text (within reason) of the System X requirement, i.e., the “shall.”

- Success criteria for the System X requirement.

- Verification method for the System X requirement (analysis, inspection, demonstration, or test). If not verified in ECS-316 then indicate facility or laboratory used to perform the verification and validation (home, ECS-315).

- Indicate the documents that contain the objective evidence that the requirement was satisfied.

- Simple PASS/FAIL
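The seven columns described above map naturally onto a small record type, which makes it easy to check a matrix for common mistakes before a review. The following is a minimal sketch under assumptions: the class and function names are hypothetical, the sample rows contain placeholder text, and the two checks shown (duplicate identifiers, a PASS/FAIL entry without evidence) are examples rather than a complete rule set.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Method(Enum):
    ANALYSIS = "analysis"
    INSPECTION = "inspection"
    DEMONSTRATION = "demonstration"
    TEST = "test"


@dataclass
class MatrixRow:
    requirement_no: str
    paragraph: str
    shall_statement: str
    success_criteria: str
    method: Method
    results: str = ""               # document holding the objective evidence
    passed: Optional[bool] = None   # None until the requirement is verified


def check_matrix(rows):
    """Return a list of human-readable problems found in the matrix."""
    problems = []
    seen = set()
    for row in rows:
        if row.requirement_no in seen:
            problems.append(f"{row.requirement_no}: duplicate identifier")
        seen.add(row.requirement_no)
        if row.passed is not None and not row.results:
            problems.append(f"{row.requirement_no}: PASS/FAIL recorded without evidence")
    return problems


def summary(rows):
    """Count rows that passed, failed, or are still open."""
    return {
        "pass": sum(1 for r in rows if r.passed is True),
        "fail": sum(1 for r in rows if r.passed is False),
        "open": sum(1 for r in rows if r.passed is None),
    }


# Placeholder rows for illustration only.
rows = [
    MatrixRow("P-1", "3.1", "placeholder shall", "placeholder criteria",
              Method.TEST, "test_report_01", True),
    MatrixRow("P-2", "3.2", "placeholder shall", "placeholder criteria",
              Method.INSPECTION),
    MatrixRow("P-2", "3.3", "placeholder shall", "placeholder criteria",
              Method.ANALYSIS, "", False),
]
problems = check_matrix(rows)
counts = summary(rows)
```

Keeping the verification method as an enumeration limits entries to the four methods named above, and leaving `passed` unset until evidence exists keeps open items visible in the summary.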

Program Validation

Source: NASA Systems Engineering Handbook NASA-SP-2007-6105-Rev-1, Appendix E: Creating the Validation Plan (Including Validation Requirements Matrix)

When developing a design, it is important to identify a validation approach for demonstrating that the system (robot) can accomplish its intended mission. Validation testing is typically defined and conducted by the customer.

To simplify the course, a single validation grade will be assigned at the end of the mission.

Terminology

High-Level Program/Project Requirements and Expectations: These would be the top-level requirements and expectations (e.g., needs, wants, desires, capabilities, constraints, and external interfaces) for the product(s) to be developed. (Section 4.1.1.3)

ConOps: Concept of Operations – This describes how the system will be operated during the life-cycle phases to meet stakeholder expectations. It describes the system characteristics from an operational perspective and helps facilitate an understanding of the system goals. (Section 4.1.1.3)

SE: Systems Engineering is the art and science of developing an operable system capable of meeting requirements within often opposed constraints. Systems engineering is a holistic, integrative discipline, wherein the contributions of structural engineers, electrical engineers, mechanism designers, power engineers, human factors engineers, and many more disciplines are evaluated and balanced, one against another, to produce a coherent whole that is not dominated by the perspective of a single discipline. (Section 2.0 Fundamentals of System Engineering)